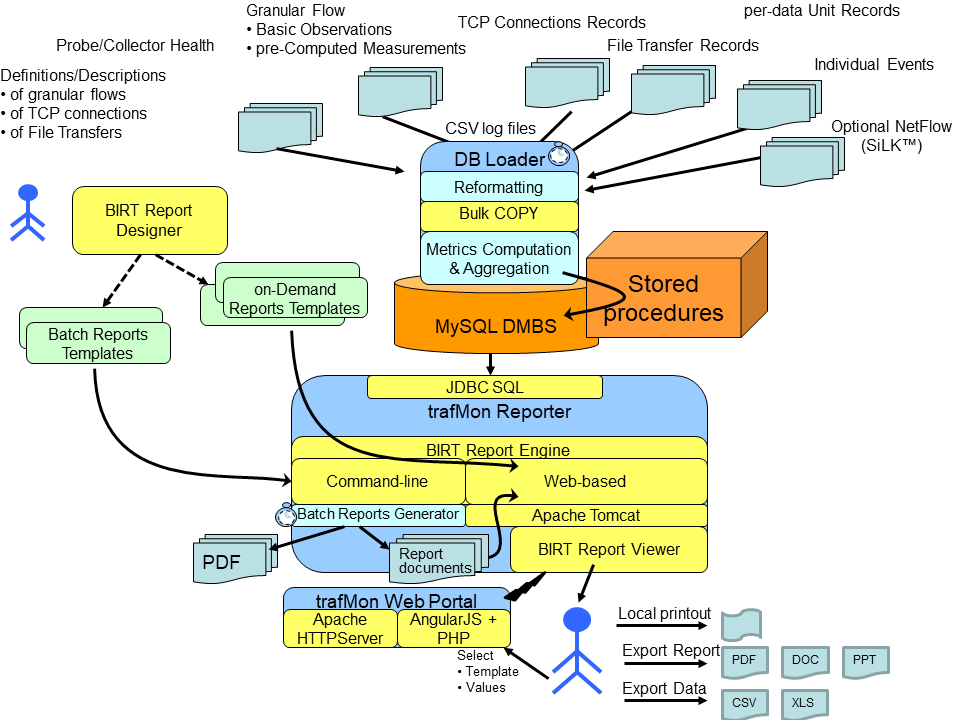

Components

The Database

Several types of ASCII log files are produced by the trafMon collector, typically every 5 minutes:

- Flow instance descriptions

- Histogram slice definitions of metric instances

- IPv4 counters

- IPv4 size distributions

- ICMPv4 counters

- UDP counters

- TCP counters

- TCP connections

- FTP counters

- FTP transfers

- Two-way round-trip delays

- One-way latencies

- or, alternatively, one-way hop definitions

- and one-way hop timestamps

- One-way counters

- or, separately, one-way lost, one-way missed and one-way dropped counters

- trafMon probe and collector events

When the NetFlow data feed from CERT® SiLK™ is also used, a NetFlow records log is produced as well, once per hour.

Typically every 10 minutes, these files are moved, pre-processed, merged and bulk-loaded, mostly into (non-visible) temporary tables of the database. NetFlow pre-processing is rather heavy. The volumes of each flow are distributed equally over every minute of its duration, so that they stay consistent with the corresponding IPv4 counters produced by the probes.
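The equal spreading of NetFlow volumes over minutes can be sketched as follows. This is a minimal illustration, not the trafMon pre-processing code. It rests on several assumptions:

- each flow record carries a start time, an end time, an octet count and a packet count;
- every minute touched by the flow counts as one share, including partial first and last minutes;
- fractional shares are kept as floats.

The function names `spread_flow` and `spread_flows` are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def spread_flow(start: datetime, end: datetime, octets: int, packets: int):
    """Split one flow's volumes equally over every minute it touches.

    Returns {minute_start: (octets_share, packets_share)}.
    Assumption: partial first/last minutes receive a full equal share.
    """
    if end < start:
        raise ValueError("flow ends before it starts")
    first = start.replace(second=0, microsecond=0)
    last = end.replace(second=0, microsecond=0)
    n_minutes = int((last - first).total_seconds() // 60) + 1
    return {
        first + timedelta(minutes=i): (octets / n_minutes, packets / n_minutes)
        for i in range(n_minutes)
    }


def spread_flows(flows):
    """Accumulate per-minute shares of many (start, end, octets, packets) flows."""
    per_minute = defaultdict(lambda: [0.0, 0.0])
    for start, end, octets, packets in flows:
        for minute, (o, p) in spread_flow(start, end, octets, packets).items():
            per_minute[minute][0] += o
            per_minute[minute][1] += p
    return dict(per_minute)
```

For example, a flow from 10:00:30 to 10:02:10 carrying 300 octets and 3 packets contributes 100 octets and 1 packet to each of the minutes 10:00, 10:01 and 10:02.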

The structure of temporary and persistent tables is defined in the `trafMon_template` MySQL database, which also contains the trafMon stored procedures and the protocol table defining the known application service TCP and UDP port numbers, and their optional precedence.
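The source does not describe how the optional precedence is applied. One plausible reading is shown here purely as a hypothetical sketch. When both ports of a flow appear in the protocol table, the entry with the higher precedence names the service, and a missing precedence counts as 0. The `PROTOCOLS` entries and precedence values are illustrative; they are not the contents of `trafMon_template`.

```python
from typing import Dict, Optional, Tuple

# Hypothetical protocol table excerpt: (transport, port) -> (service, precedence).
# Values are illustrative only; None stands for "no precedence given".
PROTOCOLS: Dict[Tuple[str, int], Tuple[str, Optional[int]]] = {
    ("tcp", 21): ("ftp", 10),
    ("tcp", 22): ("ssh", 5),
    ("tcp", 80): ("http", 1),
    ("tcp", 8080): ("http-alt", None),
    ("udp", 53): ("dns", 10),
}


def classify(transport: str, src_port: int, dst_port: int) -> Optional[str]:
    """Name the service of a flow from its ports, or None if neither port is known.

    Assumed rule: if both ports are known, the higher precedence wins;
    a missing precedence counts as 0.
    """
    known = [PROTOCOLS[(transport, port)] for port in (src_port, dst_port)
             if (transport, port) in PROTOCOLS]
    if not known:
        return None
    service, _ = max(known, key=lambda entry: entry[1] if entry[1] is not None else 0)
    return service
```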

If the target MySQL database (named `trafMon` by default) does not yet exist, it is created. Each persistent destination table is then created as soon as initial data are available for it. For counters, fresh data may fall into time intervals (minute, hour, day) for which pre-aggregated data already exist; in that case the aggregated values are updated. For new time intervals, the newly computed aggregates are simply appended to the persistent tables.
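The update-or-append logic for counters can be sketched in Python, with a dictionary standing in for a persistent table. This is an illustration under assumptions:

- rows are keyed by a hypothetical metric key plus the interval start;
- counts are simply summed.

In MySQL itself, an upsert of this kind is commonly written as `INSERT ... ON DUPLICATE KEY UPDATE`, although the source does not say which statements trafMon uses.

```python
from collections import defaultdict
from datetime import datetime


def truncate(ts: datetime, granularity: str) -> datetime:
    """Start of the minute, hour or day interval containing ts."""
    if granularity == "minute":
        return ts.replace(second=0, microsecond=0)
    if granularity == "hour":
        return ts.replace(minute=0, second=0, microsecond=0)
    if granularity == "day":
        return ts.replace(hour=0, minute=0, second=0, microsecond=0)
    raise ValueError(granularity)


def merge_counters(persistent: dict, fresh: list, granularity: str):
    """Fold fresh (key, timestamp, count) samples into persistent aggregates.

    persistent maps (key, interval_start) -> count and is modified in place.
    Returns (number of intervals updated, number of intervals appended).
    """
    batch = defaultdict(int)
    for key, ts, count in fresh:
        batch[(key, truncate(ts, granularity))] += count
    updated = appended = 0
    for row_key, count in batch.items():
        if row_key in persistent:
            persistent[row_key] += count  # interval already aggregated: update it
            updated += 1
        else:
            persistent[row_key] = count   # new interval: append it
            appended += 1
    return updated, appended
```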

Most tables are physically split into partitions covering successive time intervals of appropriate duration, and new partitions are created automatically. When old fine-grained data become obsolete, it therefore suffices to drop the corresponding partition(s), avoiding a DELETE query that would heavily load the MySQL server. A MySQL stored procedure `Partition_drop()` removes all partitions of a given table holding data older than a given delay.
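Selecting the obsolete partitions can be sketched as below. The sketch assumes RANGE partitioning on time, where each partition's exclusive `VALUES LESS THAN` bound is known (here as a `datetime`). A partition whose upper bound is at or before the cutoff contains only rows older than the cutoff, so it can be dropped whole with MySQL's `ALTER TABLE ... DROP PARTITION`. The partition and table names are hypothetical. This is not the implementation of `Partition_drop()`, whose parameters are not documented here.

```python
from datetime import datetime, timedelta


def obsolete_partitions(partitions, now: datetime, max_age: timedelta):
    """Names of RANGE partitions whose rows are all older than now - max_age.

    partitions: iterable of (name, upper_bound), where upper_bound is the
    exclusive VALUES LESS THAN limit, expressed here as a datetime.
    """
    cutoff = now - max_age
    return [name for name, upper in partitions if upper <= cutoff]


def drop_statement(table: str, names):
    """Build one ALTER TABLE ... DROP PARTITION statement, or None if nothing to drop."""
    if not names:
        return None
    return "ALTER TABLE `{}` DROP PARTITION {}".format(table, ", ".join(names))
```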

After each regular data load, the pre-defined known IP address ranges defining Activities and Locations are reloaded, and the unresolved IP addresses are looked up for DNS name retrieval.
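Matching an IP address against the known ranges can be sketched with Python's standard `ipaddress` module. The following are all assumptions made for illustration:

- the `RANGES` list;
- its Activity and Location names;
- the longest-prefix rule for overlapping ranges.

The source does not state how overlaps are resolved.

```python
import ipaddress
from typing import Optional, Tuple

# Hypothetical range definitions: (network, activity, location).
RANGES = [
    (ipaddress.ip_network("10.1.0.0/16"), "Operations", "Site-A"),
    (ipaddress.ip_network("10.1.20.0/24"), "Payload", "Site-A"),
    (ipaddress.ip_network("192.168.5.0/24"), "Office", "Site-B"),
]


def classify_ip(address: str) -> Optional[Tuple[str, str]]:
    """Return (activity, location) of the most specific matching range, or None.

    Assumption: when ranges overlap, the longest prefix wins.
    """
    ip = ipaddress.ip_address(address)
    matches = [(net, act, loc) for net, act, loc in RANGES if ip in net]
    if not matches:
        return None
    _net, activity, location = max(matches, key=lambda m: m[0].prefixlen)
    return activity, location
```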

Once per week (possibly less often), the full set of DNS names is queried again, which causes a big burst of DNS transactions from the trafMon central server.
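A full reverse DNS refresh amounts to one PTR lookup per known address, which is why it produces a burst of queries. A minimal sequential sketch using the standard `socket.gethostbyaddr` follows. The `resolver` parameter is a hypothetical hook added so that the function can be exercised without network access. Failed lookups are recorded as `None`.

```python
import socket
from typing import Callable, Dict, Iterable, Optional


def refresh_names(addresses: Iterable[str],
                  resolver: Callable = socket.gethostbyaddr) -> Dict[str, Optional[str]]:
    """Re-query the reverse DNS name of every address; None when the lookup fails."""
    names: Dict[str, Optional[str]] = {}
    for address in addresses:
        try:
            hostname, _aliases, _addrs = resolver(address)
            names[address] = hostname
        except (socket.herror, socket.gaierror):
            names[address] = None  # no PTR record, or resolution error
    return names
```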

Once a day, the volumes of passive FTP data connections are merged with those of corresponding FTP session flows. Then the aggregation per Activity/Location/peer Location or Country is conducted.
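The merge of passive FTP data connections into their FTP sessions can be sketched as follows. Two things here are assumptions:

- the matching rule: same client and server addresses, with the data connection starting while the session is open;
- the `FtpSession` and `DataConnection` record types.

trafMon's actual criteria may differ.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass
class FtpSession:          # hypothetical record for an FTP control session flow
    client: str
    server: str
    start: datetime
    end: datetime
    octets: int


@dataclass
class DataConnection:      # hypothetical record for a passive FTP data connection
    client: str
    server: str
    start: datetime
    octets: int


def merge_passive_data(sessions: List[FtpSession],
                       data: List[DataConnection]) -> List[DataConnection]:
    """Add each data connection's volume to the session it belongs to.

    Returns the data connections that matched no session.
    """
    unmatched = []
    for conn in data:
        for session in sessions:
            if (session.client == conn.client and session.server == conn.server
                    and session.start <= conn.start <= session.end):
                session.octets += conn.octets
                break
        else:  # loop finished without a match
            unmatched.append(conn)
    return unmatched
```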

Once a day, but later, the same aggregation is performed on NetFlow data.
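The aggregation per Activity/Location/peer Location or Country can be illustrated with a simple group-by. This sketch assumes that each input record already carries its resolved Activity and Location. It also carries a peer Location when the peer address falls into a known range, and otherwise a peer Country. The tuple layout is hypothetical.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple

# Hypothetical record layout: (activity, location, peer_location, peer_country, octets)
Record = Tuple[str, str, Optional[str], Optional[str], int]


def aggregate(records: Iterable[Record]) -> Counter:
    """Sum octets per (activity, location, peer).

    peer is the peer Location when known, otherwise the peer Country.
    """
    totals: Counter = Counter()
    for activity, location, peer_location, peer_country, octets in records:
        peer = peer_location if peer_location is not None else peer_country
        totals[(activity, location, peer)] += octets
    return totals
```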

These tasks are Python scripts or MySQL stored procedure jobs, scheduled with the crontab utility.

Generating some reports requires creating working tables that are persistent, although they are only needed temporarily. They cannot be MySQL temporary tables, because we have no control over the database session, and the report generator process offers no easy hook to perform their removal. Hence a MySQL stored procedure `Drop_working_tables()` is available to drop all such working tables older than a given delay.
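Finding working tables older than a given delay can be sketched as below. The sketch assumes the working tables are identified by a name prefix (the `work_` default is hypothetical). It also assumes their creation times are available, for instance from the `TABLE_NAME` and `CREATE_TIME` columns of MySQL's `information_schema.TABLES`. It only illustrates the idea and is not the code of `Drop_working_tables()`.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple


def stale_working_tables(tables: Iterable[Tuple[str, datetime]], now: datetime,
                         max_age: timedelta, prefix: str = "work_") -> List[str]:
    """Names of working tables (hypothetical prefix) created before now - max_age."""
    cutoff = now - max_age
    return [name for name, created in tables
            if name.startswith(prefix) and created < cutoff]


def drop_statements(names: Iterable[str]) -> List[str]:
    """One DROP TABLE statement per stale working table."""
    return ["DROP TABLE IF EXISTS `{}`".format(name) for name in names]
```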

These reports are intended for deployment in the BIRT Runtime Engine and Report Viewer J2EE application, within an Apache Tomcat server environment. This lets the user generate Web reports on demand, which can also be printed or saved as PDF or other document formats. The user can also download the underlying dataset as CSV for import into Excel or another spreadsheet application.

To simplify the selection of parameters for on-demand report generation, a basic JavaScript/AngularJS/jQuery and PHP web application has been produced for the Apache HTTP Server. It displays a dynamic menu bar at the top of the screen and launches the Tomcat/BIRT report generation, whose output is displayed in the frame below.
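Launching report generation from the menu essentially means building a BIRT Report Viewer URL. To the best of our knowledge, the viewer exposes `frameset` (viewer with toolbar) and `run` servlet mappings and accepts the `__report` and `__format` URL parameters. The following are hypothetical:

- the base URL;
- the report file name;
- the report parameter names.

Note that the actual trafMon web application is written in JavaScript/PHP, not Python.

```python
from typing import Optional
from urllib.parse import urlencode


def birt_report_url(base: str, report: str, params: dict,
                    servlet: str = "frameset", fmt: Optional[str] = None) -> str:
    """Build a BIRT Report Viewer URL for on-demand report generation.

    base:    viewer web-app URL (hypothetical, e.g. "http://localhost:8080/birt").
    report:  .rptdesign file name; params: report parameters by name.
    servlet: "frameset" for the interactive viewer, "run" for direct output.
    fmt:     optional output format passed as __format (e.g. "pdf").
    """
    query = {"__report": report}
    if fmt is not None:
        query["__format"] = fmt
    query.update(params)
    return "{}/{}?{}".format(base.rstrip("/"), servlet, urlencode(query))
```

For instance, calling it with `servlet="run"` and `fmt="pdf"` would request the same report directly as a PDF document instead of opening the interactive viewer.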

Two example scripts are also provided, one for synthesis reports and one for protocol details reports, which automatically generate PDF report files in batch mode.